Release time: 2024-07-14

Without the "brain" of a multimodal large model, the "body" is just a mechanical device without intelligence.

The following article is from AI Technology Review, written by Chen Luyi.

How is the “intelligence” of embodied intelligence manifested?

Since Leifeng.com-AI Technology Review launched the "Ten People Talk about Embodied Intelligence" column, this has been one of the questions raised most often in our visits with researchers in the field.

In short, embodied intelligence refers to a technology that combines intelligent systems with physical entities to enable them to perceive the environment, make decisions, and perform actions. The key word is "embodied", which means that it is not just abstract algorithms and data, but interacts with the world through physical form.

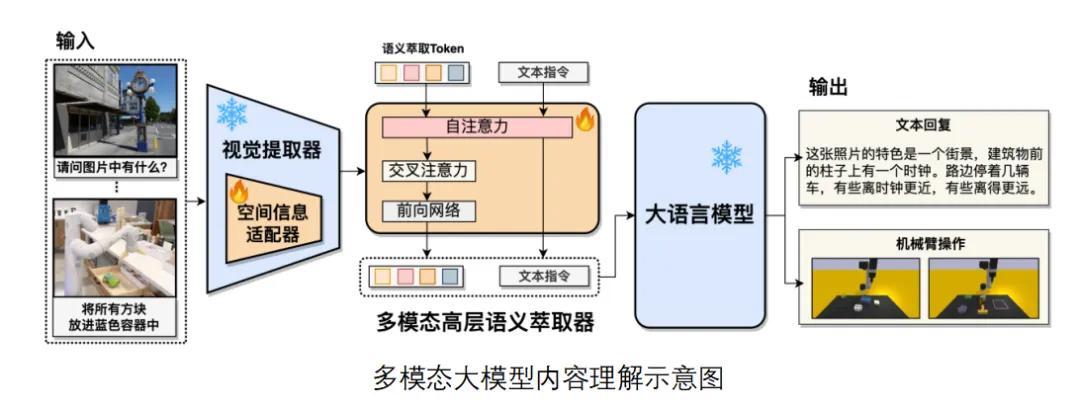

However, to achieve true "intelligence", embodied intelligent systems need a powerful "brain" to support their complex decision-making and learning processes. The "brain" here is not an organ in the biological sense, but refers to an advanced computing model that can process and understand multimodal information - a multimodal large model. This model can integrate multiple sensory data such as vision, hearing, and touch, as well as abstract information such as language and instructions, to provide robots with a richer and more comprehensive ability to understand the environment.

In November 2022, the release of ChatGPT demonstrated a breakthrough in large language models (LLMs). It inspired ambitious ideas about applying large models across industries and pushed "embodied intelligence" into the spotlight, prompting in-depth discussion of how machines can interact more naturally with humans and the environment and setting off a new wave of multimodal large model research.

Natural language processing (NLP) is one of the core technologies underlying large models. Harbin Institute of Technology (HIT) is a well-established engineering school with strong NLP research and deep technical experience in large model research. Jiutian, HIT's self-developed, independently controllable multimodal large model, has attracted widespread attention in the industry. Its notable characteristics are wide modality coverage, top-tier multimodal datasets, strong cross-modal connection capabilities, and strong scalability, and it performs well on many evaluation metrics. Jiutian's papers on video-text processing and image-text processing won the Best Paper Award of ACM MM 2022.

The multimodal large model and embodied intelligence research at HIT is led by Professor Nie Liqiang, who has focused on multimodal content analysis and understanding for the past 15 years and is convinced of the importance of multimodal perception, fusion and understanding. He observed that traditional robots have weak autonomous decision-making capabilities, while multimodal large models are good at understanding and decision-making but cannot interact with the physical world. This inspired him to combine the two, using the robot as the body and the multimodal large model as the brain so that each complements the other.

Some people believe that multimodal large model technology will drive rapid upgrades of the robot's "brain", and that the brain will evolve far faster than the robot body itself. It may pass the technology maturity point and enter the stage of large-scale industrial deployment within the next two to three years.

Recently, AI Technology Review visited Professor Nie Liqiang and discussed with him topics such as research trends in the field of embodied intelligence and the challenges faced by the integration of industry, academia and research. The following is a transcript of the interview between AI Technology Review and Nie Liqiang on the topic of embodied intelligence. Due to space limitations, AI Technology Review has edited the transcript without changing its original meaning:

"Brain" drives the development of embodied intelligence

AI Technology Review: What do you think of the recent embodied intelligence craze? When people are researching and discussing embodied intelligence, what are their expectations for technology and applications?

Nie Liqiang: The embodied intelligence craze is the result of combining large model technology from artificial intelligence with robotics. The breakthrough in large models provides robots with a new "brain", and the interaction between robots and the physical world in turn gives large models a new focus. The two promote each other and complement each other's strengths.

Research trends in the field of embodied intelligence are also constantly changing. In the initial stage of large-model empowerment, some work applied new results from artificial intelligence directly to robots, but the integration was not deep enough. For example, the common modalities of multimodal large models are vision and text, but robots are exposed to a wider range of information: vision, hearing, touch, human instructions, the position of the robot arm, and so on. In the future, large models will need to adapt to the characteristics of embodied intelligence tasks, perceiving and interacting in the real physical world and integrating rich multimodal information.

Recently, research on embodied intelligence driven by large models has gradually deepened, moving from preliminary applications to deep integration. Integration with robot motion control in particular is key to the technology's development and also a major challenge. As research deepens, we expect large models to understand and control the robot's body more comprehensively and achieve deeper physical interaction.

If the challenges in the field of embodied intelligence are effectively solved, its application potential is huge. Embodied intelligence can bring intelligent agents into vertical fields such as intelligent manufacturing and the service industry, for example in industrial inspection and housekeeping services, and can lead the upgrade of manufacturing, services and other industries. As the technology matures, its application scenarios will become more extensive.

AI Technology Review: What role do multimodal large models play in embodied intelligence?

Nie Liqiang: The multimodal large model is the "brain" of the embodied intelligent robot and is of vital importance. It sits upstream in the development chain and provides intelligence for the robot. Without this "brain", the downstream robot "body" is just a mechanical device without intelligence. A powerful multimodal large model is the key driving force for the development of the embodied intelligence field.

The multimodal large model overcomes the limits of a single modality, which is not enough to cope with complex real-world scenarios. It greatly improves the robot's perception and understanding capabilities, enabling the robot to understand complex scenarios and tasks more accurately and comprehensively. In addition, the multimodal large model has learned a wealth of human knowledge through large-scale pre-training, giving the robot the ability to plan and make decisions autonomously.

The multimodal large model also optimizes human-machine interaction. It allows robots to accurately understand human intentions through multimodal information such as voice and gestures, making the interaction between us and robots more natural. The powerful generalization ability of the multimodal large model also lays the foundation for the robot's autonomous learning ability, helping the robot adapt to changing tasks, and taking a big step towards becoming a truly intelligent entity with the ability to autonomously learn and adapt to environmental changes.

I believe that the multimodal large model, as the "brain", affects all aspects of the robot. Its upstream empowerment of the robot has removed the key obstacles to the implementation of embodied intelligence and is the source of progress in the field of embodied intelligence.

Future Trends: Humanization and Collaboration

AI Technology Review: What trends do you expect in the future development of multimodal large models in the field of embodied intelligence?

Nie Liqiang: The future development of multimodal large models in the field of embodied intelligence will bring revolutionary changes, making AI systems more human-like in how they interact with and understand the physical world. It is foreseeable that the following key trends will shape this field in the coming years:

Multimodal perception: The model will seamlessly integrate multiple sensory information such as touch and smell to provide a more comprehensive understanding of the environment, close to human perception capabilities.

Model lightweighting: Develop efficient multimodal large model architectures and use model compression and knowledge distillation techniques to improve the flexibility and efficiency of embodied systems.

Transfer and few-shot learning: Embodied AI will demonstrate advances in transfer learning and few-shot learning, quickly adapting to new tasks without requiring large amounts of data for training.

Development of underlying technology: Models will better connect abstract knowledge with physical reality, promote breakthroughs in common sense reasoning and causal understanding, and enhance long-term memory and continuous learning capabilities.

Natural interaction capabilities: Improve the intuitiveness and contextual awareness of communication between people and AI machines, enabling robots to conduct complex conversations and interpret environments and actions.

World model building: Creating a comprehensive internal representation of the world for planning, prediction, and decision-making by embodied AI.

Neuromorphic computing fusion: Multimodal large models are combined with neuromorphic computing methods to simulate biological neural networks and improve energy efficiency and adaptability.

These trends suggest that in the future, embodied AI systems will become closer to humans in terms of understanding and interacting with the world through multimodal large models, opening up possibilities for a wide range of applications and fields.

AI Technology Review: What do you think is the biggest challenge currently facing large multimodal models?

Nie Liqiang: The biggest challenge of multimodal large models is how to integrate and align multiple data modalities while maintaining coherence, efficiency, and ethical considerations. Different modalities such as text, images, audio, and video have unique characteristics, and aligning them is a fundamental problem that requires effective shared representation through pre-training, fine-tuning, and architecture design.

The computational resources required by large multimodal models grow exponentially with model size and the number of modalities, raising issues of scalability, accessibility, and deployability that may limit how widely the models are adopted.

Data quality and diversity are also a significant hurdle. Acquiring large-scale, high-quality, and unbiased multimodal datasets is a time-consuming and expensive process.

The complexity of models also makes it increasingly difficult to ensure interpretability and understandability, which are critical to the trustworthiness of models in critical applications.

Finally, multimodal large models also face challenges in terms of ethics and social impact. Issues such as misinformation, deep fakes, and privacy violations require the formulation of corresponding safeguards and ethical guidelines, and more importantly, the attention and cooperation of all parties.

Collaboration between academia and industry

AI Technology Review: What do you think of the current collaboration between academia and industry in embodied intelligence research?

Nie Liqiang: Embodied intelligence research requires the combination of basic research and innovative thinking in academia with the practical experience and data of industry to jointly overcome complex scientific and technological challenges. Many embodied intelligence companies founded in the past one to two years were incubated by universities. The growing number of university-incubated companies shows the key role of academia in promoting technology commercialization.

Government support has given impetus to university-industry cooperation. By encouraging universities and enterprises to apply for projects jointly, it has provided the necessary funding and platform support. The establishment of joint laboratories has promoted the deep integration of academia and industry and accelerated the exchange of knowledge and innovation.

To strengthen cooperation, we need to further align academic research with industry needs, develop standardized embodied intelligence research platforms and protocols, and cultivate talents who can connect the two worlds. As educators, we have the responsibility to cultivate students' cross-border capabilities in knowledge, technology, and research methods.

Overall, the cooperation between academia and industry shows great potential in the field of embodied intelligence. Through government support, joint laboratories, and alignment of research with demand, universities and enterprises will jointly promote the innovative development of embodied intelligence.

AI Technology Review: What is the prospect of embodied intelligence in academia and industry? What specific research cases do you and your team have?

Nie Liqiang: Embodied intelligence is highly favored in both academia and industry, and has opened up a new path for cutting-edge interdisciplinary research. Both AI researchers and robotics researchers are actively exploring this field. Industry recognizes the challenges of large-model-enabled robots and is optimistic about their application prospects.

(Ruoyu Jiutian project unmanned kitchen scene technical verification)

HIT has made significant research progress in the field of embodied intelligence, such as the Ruoyu Jiutian project, which has achieved technical verification in unmanned kitchen scenarios and made breakthroughs in key technologies such as multimodal large models driving group intelligence. We have successfully combined multimodal large models with robot entities and developed a robot system with perception, interaction, planning and action capabilities.

In this process, we faced challenges such as multimodal information fusion, complex task planning, and precise motion control. Each step requires careful research. For example, large models must effectively process multimodal information, the robot's "brain" needs to accurately plan tasks, and the "cerebellum" is responsible for precise action execution. These research results provide a solid foundation for the application of embodied intelligence.

AI Technology Review: What are Harbin Institute of Technology’s future development plans in the field of embodied intelligence?

Nie Liqiang: Building on HIT's existing research foundation in multimodal large models and robots, we have formulated a systematic research plan for embodied intelligence. It includes aspects such as perception, planning, manipulation, and group collaboration of intelligent agents, and covers various forms of agents such as robotic arms, drones, and humanoid robots.

In short, embodied intelligence is a promising research field. HIT will continue to promote scientific and technological innovation and talent cultivation, and strive to make greater contributions to academia and industry.

Practice of the Brain + Cerebellum Paradigm

AI Technology Review: Ruoyu Technology once proposed the slogan of "equipping robots with brains". How do you view the synergistic relationship between the brain and cerebellum, and future research directions?

Nie Liqiang: Ruoyu Technology is a high-tech company incubated at Harbin Institute of Technology. It emphasizes the collaborative work of the robot's cognitive system (brain) and motion control system (cerebellum). The multimodal large model Jiutian is responsible for understanding, perception, planning and decision-making tasks, while the cerebellum performs precise physical movements and interactions. This collaboration ensures that the robot can carry out specific control according to high-level instructions and feed execution results back to the brain so that it can adjust its strategy, which is crucial for adaptability and robustness.

Ruoyu's future research will focus on strengthening this synergy, integrating model planning with low-level control algorithms, including developing error correction and online learning mechanisms to enable the brain to quickly adjust according to the execution results of the cerebellum, optimizing the planning of long-sequence tasks, and improving the robot's perception and decision-making capabilities through multimodal perception and adaptive learning. In addition, Ruoyu will also explore how to use the brain's high-level understanding ability to improve the performance of the cerebellum, such as guiding grasping planning or trajectory optimization through semantic understanding.
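The brain-cerebellum loop described in this answer can be illustrated with a small sketch. It is only an illustration under stated assumptions, not Ruoyu's or Jiutian's implementation: the names `Brain`, `Cerebellum`, `StepResult` and `run_task` are hypothetical, the planner is a fixed lookup standing in for a multimodal large model, and the controller is a stub that fails selected steps once so that the feedback path is exercised.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class StepResult:
    step: str
    ok: bool
    detail: str = ""


class Brain:
    """Hypothetical high-level planner (stand-in for a multimodal large model)."""

    def plan(self, instruction: str) -> list[str]:
        # A real system would query a model; this sketch uses a fixed lookup.
        plans = {"serve tea": ["locate cup", "grasp cup", "pour tea", "deliver cup"]}
        return list(plans.get(instruction, []))

    def replan(self, failed: StepResult) -> list[str]:
        # Simple correction policy: re-observe the scene, then retry the step.
        return [f"re-observe before {failed.step}", failed.step]


@dataclass
class Cerebellum:
    """Hypothetical low-level controller; each flaky step fails exactly once."""

    flaky_steps: set[str] = field(default_factory=set)

    def execute(self, step: str) -> StepResult:
        if step in self.flaky_steps:
            self.flaky_steps.discard(step)  # the next attempt will succeed
            return StepResult(step, ok=False, detail="execution failed")
        return StepResult(step, ok=True)


def run_task(brain: Brain, cerebellum: Cerebellum, instruction: str,
             max_replans: int = 3) -> list[StepResult]:
    """Run a plan step by step, feeding failures back to the brain."""
    queue = brain.plan(instruction)
    log: list[StepResult] = []
    replans = 0
    while queue:
        result = cerebellum.execute(queue.pop(0))
        log.append(result)
        if not result.ok:
            if replans >= max_replans:
                break  # give up rather than loop forever
            replans += 1
            # Corrective steps are placed ahead of the remaining plan.
            queue = brain.replan(result) + queue
    return log


if __name__ == "__main__":
    trace = run_task(Brain(), Cerebellum(flaky_steps={"grasp cup"}), "serve tea")
    for r in trace:
        print(("ok  " if r.ok else "FAIL"), r.step)
```

The point of the sketch is the direction of information flow: the planner never issues motor commands, and the controller never decides what to do next; it only reports the outcome of each step so the planner can adjust.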

AI Technology Review: What innovations and breakthroughs has Ruoyu Technology made in multimodal big models and embodied intelligence? How is multimodal big model technology applied to products?

Nie Liqiang: Ruoyu Technology has made breakthroughs in the development of embodied intelligence driven by multimodal large models. It has implemented the brain-cerebellum paradigm, integrating natural language processing, visual perception, and action planning, and has given robots intelligent "brains" in multiple fields.

Core technologies include retrieval-augmented large-model planning that reduces hallucination, which allows robots to autonomously perform complex tasks based on natural language instructions, such as order processing and serving coordination in unmanned kitchens. In terms of 3D perception, robots can understand and manipulate objects in complex environments without pre-registration, showing high flexibility and robustness.

Ruoyu Technology has also achieved imitation learning driven by diffusion models, enabling robots to learn complex skills without programming. These technologies are integrated into our Jiutian robot "brain", which supports multimodal interaction. It is delivered in a standardized cloud + device form through an API and SDK and, in cooperation with industry chain partners, is applied to food processing, sorting, assembly, the 3C industry, and other fields.

Ruoyu has deployed the "Jiutian" robot in special fields, using imitation learning to efficiently perform commercial tasks. In the future, Ruoyu will promote the productization of multi-agent planning according to scene requirements and realize a closed business loop under the collaboration of multiple robots.

AI Technology Review: How do you evaluate the current application effect of embodied intelligence technology in actual scenarios?

Nie Liqiang: Embodied intelligence technology has demonstrated significant benefits in many fields. In the manufacturing industry, it has improved the interactive capabilities of robots, enhanced production efficiency and flexibility, and reduced human errors. In the logistics and warehousing fields, embodied intelligent robots have optimized the classification and handling processes of items through autonomous navigation and deep learning algorithms, improving logistics speed and reducing costs.

The service industry has also witnessed the benefits of embodied intelligence, such as welcoming, ordering and delivering robots in the hotel and catering industry, which improve customer experience and save labor costs. Despite the challenges of technical costs, environmental adaptability and ethics, the application effect of embodied intelligence technology in actual scenarios is positive and shows broad prospects, but it still needs to be continuously improved and optimized to adapt to the ever-changing market needs.
